Fixing Rancher 2 failing to run again after an uninstall

- First published: 2018-12-07 18:10:07

- Tutorial


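For various reasons I needed to reinstall Rancher 2. I assumed that a system running in Docker would be easy to reinstall, but the reinstall produced a lot of errors.

A Rancher cluster works only when all three roles, Etcd, Control, and Worker, are running normally. Worker nodes are the servers that execute workloads, and at least one Etcd service and one Control service must be deployed.

After the reinstall, both the Etcd and Control services reported errors.

Error details

On the Control side, the kube-apiserver container reported "Unable to create storage", with output similar to: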

F1207 09:28:37.357680 1 storage_decorator.go:57] Unable to create storage backend: config (&{etcd3 /registry [https://10.104.159.185:2379] /etc/kubernetes/ssl/kube-node-key.pem /etc/kubernetes/ssl/kube-node.pem /etc/kubernetes/ssl/kube-ca.pem true true 1000 0xc420516750 <nil> 5m0s 1m0s}), err (dial tcp 10.104.159.185:2379: connect: connection refused)

+ echo kube-apiserver --storage-backend=etcd3 --requestheader-allowed-names=kube-apiserver-proxy-client --cloud-provider= --client-ca-file=/etc/kubernetes/ssl/kube-ca.pem --enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,MutatingAdmissionWebhook,ValidatingAdmissionWebhook,ResourceQuota --insecure-bind-address=127.0.0.1 --requestheader-client-ca-file=/etc/kubernetes/ssl/kube-apiserver-requestheader-ca.pem --insecure-port=0 --requestheader-extra-headers-prefix=X-Remote-Extra- --service-cluster-ip-range=10.43.0.0/16 --tls-cert-file=/etc/kubernetes/ssl/kube-apiserver.pem --kubelet-client-certificate=/etc/kubernetes/ssl/kube-apiserver.pem --etcd-servers=https://10.104.159.185:2379 --proxy-client-cert-file=/etc/kubernetes/ssl/kube-apiserver-proxy-client.pem --bind-address=0.0.0.0 --secure-port=6443 --requestheader-username-headers=X-Remote-User --service-account-key-file=/etc/kubernetes/ssl/kube-apiserver-key.pem --etcd-keyfile=/etc/kubernetes/ssl/kube-node-key.pem --etcd-cafile=/etc/kubernetes/ssl/kube-ca.pem --etcd-certfile=/etc/kubernetes/ssl/kube-node.pem --proxy-client-key-file=/etc/kubernetes/ssl/kube-apiserver-proxy-client-key.pem --tls-cipher-suites=TLS_ECDHE_ECDSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_ECDSA_WITH_AES_256_GCM_SHA384,TLS_ECDHE_ECDSA_WITH_CHACHA20_POLY1305,TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384,TLS_ECDHE_RSA_WITH_CHACHA20_POLY1305 --allow-privileged=true --service-node-port-range=30000-32767 --kubelet-client-key=/etc/kubernetes/ssl/kube-apiserver-key.pem --etcd-prefix=/registry --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname --requestheader-group-headers=X-Remote-Group --tls-private-key-file=/etc/kubernetes/ssl/kube-apiserver-key.pem --authorization-mode=Node,RBAC

+ grep -q cloud-provider=azure

+ '[' kube-apiserver = kubelet ']'

...

Flag --insecure-bind-address has been deprecated, This flag will be removed in a future version.

Flag --insecure-port has been deprecated, This flag will be removed in a future version.

I1207 09:31:08.310667 1 server.go:681] external host was not specified, using 10.104.159.185

I1207 09:31:08.310795 1 server.go:152] Version: v1.12.3

I1207 09:31:09.584521 1 plugins.go:158] Loaded 7 mutating admission controller(s) successfully in the following order: NamespaceLifecycle,LimitRanger,ServiceAccount,Priority,DefaultTolerationSeconds,DefaultStorageClass,MutatingAdmissionWebhook.

I1207 09:31:09.584545 1 plugins.go:161] Loaded 6 validating admission controller(s) successfully in the following order: LimitRanger,ServiceAccount,Priority,PersistentVolumeClaimResize,ValidatingAdmissionWebhook,ResourceQuota.

I1207 09:31:09.585194 1 plugins.go:158] Loaded 7 mutating admission controller(s) successfully in the following order: NamespaceLifecycle,LimitRanger,ServiceAccount,Priority,DefaultTolerationSeconds,DefaultStorageClass,MutatingAdmissionWebhook.

I1207 09:31:09.585208 1 plugins.go:161] Loaded 6 validating admission controller(s) successfully in the following order: LimitRanger,ServiceAccount,Priority,PersistentVolumeClaimResize,ValidatingAdmissionWebhook,ResourceQuota.

The etcd container, meanwhile, kept restarting:

Failed to generate kube-etcd certificate: x509: cannot parse IP address of length 0
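The kube-apiserver error above comes down to nothing accepting connections on the etcd endpoint. As a minimal sketch, the helpers below pull the host and port out of such a log line and probe the port. The function names endpoint_from_error and port_open are hypothetical, and the probe assumes bash (for /dev/tcp) and the coreutils timeout command are available.

```shell
#!/bin/bash
# Hypothetical helper: read a log line on stdin and print "host port"
# taken from a "dial tcp HOST:PORT: ..." fragment, if one is present.
endpoint_from_error() {
  sed -n 's/.*dial tcp \([0-9.]*\):\([0-9]*\):.*/\1 \2/p'
}

# Hypothetical helper: print "open" if a TCP connection to host:port
# succeeds within 2 seconds, otherwise "closed".
# Relies on bash's built-in /dev/tcp redirection.
port_open() {
  local host="$1" port="$2"
  if timeout 2 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null; then
    echo "open"
  else
    echo "closed"
  fi
}

# Example with the endpoint from the log above:
# port_open 10.104.159.185 2379
```

A "closed" result only confirms that etcd is not listening; it does not say why. Here the cause was the etcd container restarting in a loop.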

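Troubleshooting approach

Simply reading the logs with docker logs xxx was no longer enough to solve the problem. Some of the error messages turn up in searches, but none of the suggested fixes worked.

My first guess was that the K8s and Rancher components had not been fully uninstalled.

After checking, I ruled out a network interface problem; access over the internal network was also normal.

docker inspect kube-apiserver showed: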

"HostConfig": {

"Binds": [

"/etc/kubernetes:/etc/kubernetes:z"

],

...

docker inspect etcd showed:

"HostConfig": {

"Binds": [

"/var/lib/etcd:/var/lib/rancher/etcd/:z",

"/etc/kubernetes:/etc/kubernetes:z"

],

...

These settings show that Rancher bind-mounts these host directories when it starts the Docker containers.
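Instead of reading the full docker inspect output by eye, the host side of each bind can be extracted directly. The sketch below assumes the container names kube-apiserver and etcd shown above; bind_host_paths and list_rancher_host_dirs are hypothetical helper names, not part of Docker or Rancher.

```shell
#!/bin/bash
# Hypothetical helper: read bind specs ("host:container[:opts]") on stdin,
# one per line, and print each distinct host-side path once, in input order.
# Blank lines are skipped.
bind_host_paths() {
  awk -F: 'NF && !seen[$1]++ { print $1 }'
}

# Print the host directories mounted into the two containers.
# The container names are taken from the inspect commands above.
list_rancher_host_dirs() {
  docker inspect \
    --format '{{range .HostConfig.Binds}}{{println .}}{{end}}' \
    kube-apiserver etcd | bind_host_paths
}
```

For the binds shown above this yields /etc/kubernetes and /var/lib/etcd, which are exactly the kind of directories that survive a container-only uninstall.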

Script for uninstalling Rancher 2

Based on the clues above, I wrote a dedicated script for uninstalling Rancher 2.

Remove containers

# Stop and remove containers whose docker ps line matches k8s or kube
docker kill $(docker ps -a | grep k8s | awk '{ print $1}')
docker rm $(docker ps -a | grep k8s | awk '{ print $1}')
docker kill $(docker ps -a | grep kube | awk '{ print $1}')
docker rm $(docker ps -a | grep kube | awk '{ print $1}')
# Stop and remove the Rancher containers themselves
docker kill $(docker ps -a | grep rancher | awk '{ print $1}')
docker rm $(docker ps -a | grep rancher | awk '{ print $1}')

The last two lines also remove Rancher's web service. The commands above generally do not affect other running containers, unless their names happen to match.

Remove all data volumes

docker volume rm $(sudo docker volume ls -q)

The command above does not affect volumes that are in use, because Docker refuses to delete them and reports an error such as "xxx is in use".

Remove the K8s-related configuration files

#!/bin/bash
# Remove etcd data and Kubernetes configuration left on the host
sudo rm -rf /var/etcd
sudo rm -rf /var/lib/etcd
sudo rm -rf /etc/kubernetes
sudo rm -rf /registry
echo "ok"
# Unmount everything under /var/lib/kubelet (last-mounted first), then delete it
for m in $(sudo tac /proc/mounts | sudo awk '{print $2}' | sudo grep /var/lib/kubelet); do
  sudo umount "$m" || true
done
sudo rm -rf /var/lib/kubelet/
# Same for /var/lib/rancher
for m in $(sudo tac /proc/mounts | sudo awk '{print $2}' | sudo grep /var/lib/rancher); do
  sudo umount "$m" || true
done
sudo rm -rf /var/lib/rancher/
sudo rm -rf /run/kubernetes/
echo "success"
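The unmount loops in the script depend on walking the mount table in reverse, so that nested mounts are released before their parents. As a rough sketch, the selection can be factored into a helper and previewed before anything is unmounted. The name mounts_under is hypothetical. It also uses a strict path-prefix match rather than the substring grep in the script, which is an assumption about what is wanted.

```shell
#!/bin/bash
# Hypothetical helper: print mount points equal to or below a path prefix,
# in reverse mount-table order (most recently mounted first).
#   $1 = path prefix, e.g. /var/lib/kubelet
#   $2 = mount table to read (defaults to /proc/mounts)
mounts_under() {
  local prefix="${1%/}" table="${2:-/proc/mounts}"
  tac "$table" |
    awk -v p="$prefix" '$2 == p || index($2, p "/") == 1 { print $2 }'
}

# Preview what the cleanup would unmount:
# mounts_under /var/lib/kubelet
# mounts_under /var/lib/rancher
```

Because the match is anchored to the prefix plus a slash, a sibling directory such as /var/lib/kubelet-other would not be selected, whereas a plain grep on /var/lib/kubelet would match it.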

At this point, reinstalling by following the official Rancher documentation gives a cluster that runs normally.
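Before reinstalling, it may be worth confirming that none of the directories targeted by the cleanup survived, since leftover state under them is what caused the original errors. The following is a minimal sketch; leftover_paths is a hypothetical helper name, and the path list simply repeats the directories removed by the script above.

```shell
#!/bin/bash
# Hypothetical helper: print every path given as an argument that still exists.
# Prints nothing when all of them are gone.
leftover_paths() {
  local p
  for p in "$@"; do
    if [ -e "$p" ]; then
      echo "$p"
    fi
  done
  return 0
}

# Check the directories removed by the cleanup script:
# leftover_paths /var/etcd /var/lib/etcd /etc/kubernetes /registry \
#   /var/lib/kubelet /var/lib/rancher /run/kubernetes
```

An empty result suggests the host is clean. Any path that is printed most likely still has something mounted beneath it or was recreated by a container that is still running.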


打赏

交流区

暂无内容

老师你好,我希望能用一个openwrt路由器实现IPv4和IPv6的桥接,请问我该如何实现?我尝试了直接新增dhcpv6的接口,但是效果不甚理想(无法成功获取公网的ipv6,但是直连上级路由的其他设备是可以获取公网的ipv6地)

你好

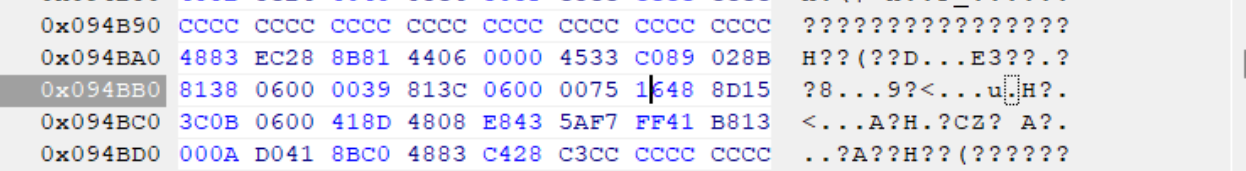

,为什么我这里是0039 813C 0600 0075 16xx xx xx,只有前6组是相同的,博客中要前8位相同,这个不同能不能照着修改呢?我系统版本是Win1124H2

大神你好,win11专业版24h2最新版26100.2033,文件如何修改?谢谢

win11专业版24h2最新版26100.2033,Windows Feature Experience Pack 1000.26100.23.0。C:\Windows\System32\termsrv.dll系统自带的这个文件,39 81 3C 06 00 00 0F 85 XX XX XX XX 替换为 B8 00 01 00 00 89 81 38 06 00 00 90。仍然无法远程连接。原来是win11 21h2系统,是可以远程链接的。共享1个主机,2个显示器,2套键鼠,各自独立操作 各自不同的账号,不同的桌面环境。

博主,win11专业版24h2最新版,C:\Windows\System32\termsrv.dll系统自带的这个文件,找不到应该修改哪个字段。我的微信:一三五73二五九五00,谢谢